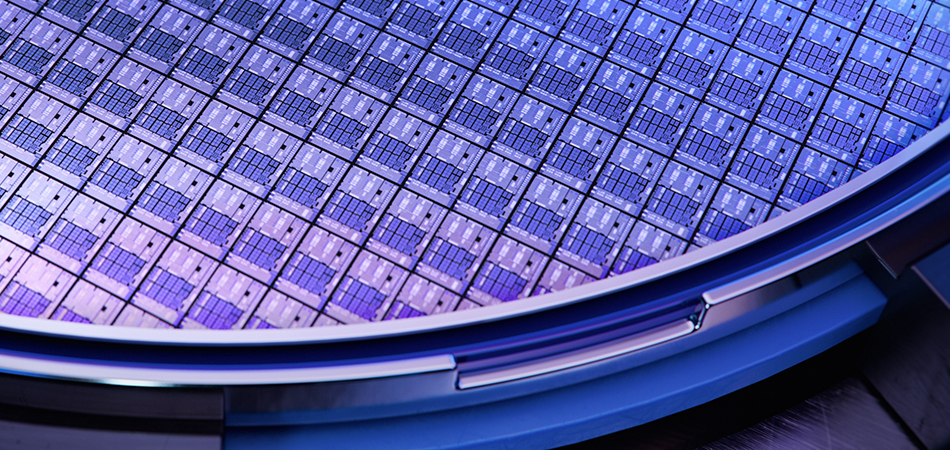

For decades, the “memory wall” has been one of the most formidable challenges in computing. As processors have grown faster and more powerful, memory bandwidth and latency have not kept pace. This widening gap has created a bottleneck, limiting the full potential of computational systems. Traditional architectures, reliant on discrete memory modules connected through lengthy interconnects, inherently suffer from delays and limited bandwidth. In response to this long-standing problem, the industry is turning toward a groundbreaking solution: wafer scale memory.

What is Wafer Scale Memory?

Wafer scale memory represents a radical shift from conventional chip-based designs. Instead of fabricating individual memory chips and packaging them separately, wafer scale memory utilizes entire silicon wafers populated densely with memory arrays. These wafers can be stacked vertically in multiple layers, using advanced 3D integration techniques such as through-silicon vias (TSVs) and micro-bump interconnects.

The result is a monolithic memory structure with extremely short communication paths between compute cores and memory cells. By bringing memory physically closer to the processing units and stacking it directly on the silicon substrate, wafer scale memory sharply reduces the latency penalties and bandwidth limitations imposed by traditional architectures.

Breaking the Memory Wall

The advantages of wafer scale memory are profound. First and foremost, it drastically increases memory bandwidth. With thousands of interconnects between compute and memory layers, data can flow at rates unattainable by conventional systems relying on external memory buses.

Second, it reduces latency. In traditional systems, part of the access delay comes from the physical distance between memory and processors, which signals must cross over package and board-level links. Wafer scale memory shortens these paths, so data access and retrieval incur far less interconnect delay.

Third, wafer scale memory enhances energy efficiency. Reduced signaling distances mean lower power consumption per bit transferred. For AI workloads, where memory access dominates power budgets, this leads to substantial gains in performance-per-watt.
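The bandwidth and energy arguments above can be made concrete with some back-of-the-envelope arithmetic. The sketch below uses the standard roofline bound (attainable throughput is the smaller of the compute peak and bandwidth times arithmetic intensity) and a simple energy-per-bit model. Every number in it is a hypothetical placeholder chosen for illustration, not a measurement of any real wafer scale product, and the function names are our own.

```python
def attainable_flops(peak_flops, bandwidth_bytes_per_s, flops_per_byte):
    """Roofline bound: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)


def transfer_power_watts(bandwidth_bytes_per_s, picojoules_per_bit):
    """Power spent moving data: bits per second times energy per bit."""
    return bandwidth_bytes_per_s * 8 * picojoules_per_bit * 1e-12


# All figures below are hypothetical, chosen only to illustrate the arithmetic.
PEAK_FLOPS = 100e12      # assumed 100 TFLOP/s compute peak
FLOPS_PER_BYTE = 2.0     # assumed arithmetic intensity of a memory-bound kernel

external_bw = 1e12       # assumed 1 TB/s over an external memory bus
stacked_bw = 20e12       # assumed 20 TB/s over dense vertical interconnects

external_flops = attainable_flops(PEAK_FLOPS, external_bw, FLOPS_PER_BYTE)
stacked_flops = attainable_flops(PEAK_FLOPS, stacked_bw, FLOPS_PER_BYTE)

# The same 1 TB/s of traffic at two assumed signaling costs.
long_link_watts = transfer_power_watts(1e12, 10.0)   # assumed 10 pJ/bit
short_link_watts = transfer_power_watts(1e12, 1.0)   # assumed 1 pJ/bit

print(f"external bus: {external_flops / 1e12:.0f} TFLOP/s attainable")
print(f"stacked:      {stacked_flops / 1e12:.0f} TFLOP/s attainable")
print(f"transfer power: {long_link_watts:.0f} W vs {short_link_watts:.0f} W")
```

With these assumed inputs the kernel stays memory-bound in both cases, so attainable throughput scales directly with bandwidth, and a tenfold lower energy per bit cuts data-movement power tenfold at the same traffic level.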

Applications and Impact

Wafer scale memory is poised to revolutionize a wide range of computational fields. In AI and machine learning, models are growing rapidly in size. Training state-of-the-art models like GPT-4 or developing next-generation vision-language models (VLMs) demands memory capacities and bandwidths that strain traditional architectures. Wafer scale memory allows these models to be trained and served for inference more efficiently, reducing both time and cost.
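To get a feel for why capacity becomes a constraint, here is a rough footprint estimate: parameter count times bytes held per parameter. The 70-billion-parameter model is hypothetical, and the 16 bytes per parameter for training is a common rule of thumb for mixed-precision training with the Adam optimizer (half-precision weights and gradients plus single-precision master weights and two optimizer moments), treated here as an assumption. Activations and caches are left out, so real requirements are higher.

```python
def footprint_gb(num_params, bytes_per_param):
    """Memory needed to hold model state, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 70_000_000_000   # hypothetical model size, not a real model

# Inference: 16-bit weights only (assumption: 2 bytes per parameter).
inference_gb = footprint_gb(NUM_PARAMS, 2)

# Training: weights, gradients, and Adam state
# (assumption: roughly 16 bytes per parameter in mixed precision).
training_gb = footprint_gb(NUM_PARAMS, 16)

print(f"inference: {inference_gb:.0f} GB, training: {training_gb:.0f} GB")
```

Under these assumptions the training state alone exceeds a terabyte, which is the kind of capacity pressure the paragraph above describes.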

In high-performance computing (HPC), scientific simulations in genomics, climate modeling, and quantum chemistry require vast memory resources. Wafer scale memory can accommodate larger datasets directly on-silicon, enabling faster simulations and more accurate results.

The Future of Computing

As data volumes continue to grow and AI models become more complex, the demand for memory bandwidth and low-latency access will only intensify. Wafer scale memory represents a fundamental evolution in system architecture, offering a path forward as the gains from transistor scaling under Moore’s Law slow.

By breaking the memory wall, wafer scale memory unlocks the next era of computing performance, one where data-intensive applications can operate at unprecedented speed and scale. It is not just an incremental improvement; it is a paradigm shift that will redefine what is possible in AI, HPC, and beyond.