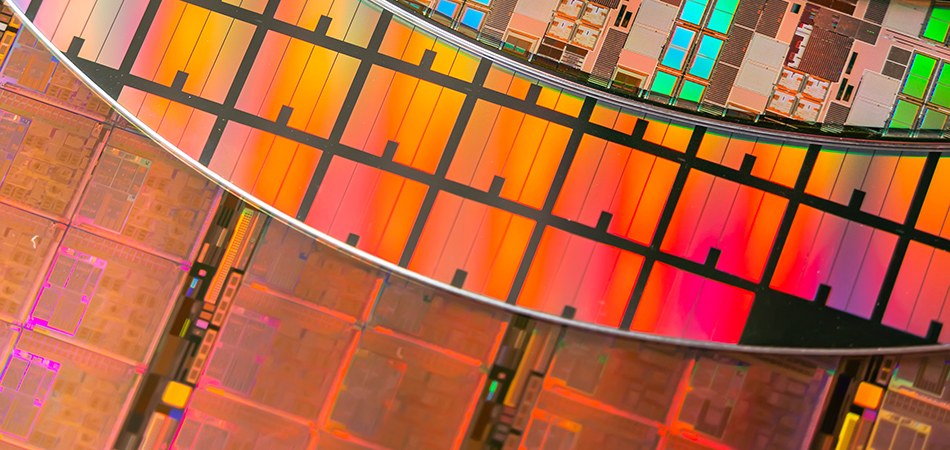

In the relentless march toward Artificial General Intelligence (AGI), the bottleneck has increasingly shifted from computational throughput to memory bandwidth and latency. Traditional systems, no matter how optimized, are bound by the constraints of chip-scale integration and discrete memory architectures. Recognizing this barrier, EinsNeXT introduces WISE (Wafer-Intelligent Stacking Engine), a revolutionary technology that seamlessly integrates compute and memory at the wafer scale, poised to redefine the landscape of AI and high-performance computing.

Breaking the Memory Wall

For decades, transistor scaling under Moore’s Law delivered exponential gains in computational performance. Memory bandwidth, however, has not kept pace. As compute units have grown more powerful, the memory wall (the growing disparity between processor speed and memory speed) has throttled overall system performance. Traditional solutions like High-Bandwidth Memory (HBM) have raised bandwidth but remain constrained by chip-scale physical limits and the overhead of packaging and interconnects.
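One common way to picture the memory wall is the roofline model, in which attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity (operations performed per byte moved). The Python sketch below is illustrative only: the 100 TFLOP/s and 1 TB/s figures are hypothetical placeholders, not WISE or HBM specifications, and the function names are chosen for this example.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Roofline bound on throughput in FLOP/s.

    peak_flops:    peak compute rate, FLOP/s
    mem_bandwidth: memory bandwidth, bytes/s
    intensity:     arithmetic intensity, FLOP per byte moved
    """
    return min(peak_flops, mem_bandwidth * intensity)


def ridge_point(peak_flops: float, mem_bandwidth: float) -> float:
    """Arithmetic intensity (FLOP/byte) at which compute becomes the limit."""
    return peak_flops / mem_bandwidth


# Hypothetical accelerator; placeholder numbers only (assumptions).
PEAK_FLOPS = 100e12  # 100 TFLOP/s
BANDWIDTH = 1e12     # 1 TB/s

if __name__ == "__main__":
    ridge = ridge_point(PEAK_FLOPS, BANDWIDTH)
    for intensity in (1, 10, 100, 1000):
        bound = attainable_flops(PEAK_FLOPS, BANDWIDTH, intensity)
        limiter = "memory" if intensity < ridge else "compute"
        print(f"{intensity:>5} FLOP/byte -> {bound / 1e12:6.1f} TFLOP/s ({limiter}-bound)")
```

Under these assumed numbers, any workload below 100 FLOP/byte is limited by memory rather than compute, which is the regime that higher memory bandwidth targets.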

WISE addresses this challenge head-on by employing wafer-scale integration and 3D wafer-on-wafer stacking. Rather than confining memory to discrete packages, WISE embeds massive arrays of high-bandwidth memory directly onto the wafer, stacked vertically in multiple layers. This approach dramatically shortens data pathways, reduces latency, and provides unprecedented memory bandwidth.

The Architecture of WISE

At its core, WISE combines several breakthrough technologies:

Wafer-Scale Compute:

A full silicon wafer acts as a monolithic accelerator, hosting tens of thousands of AI cores operating in parallel.

Wafer-on-Wafer Memory Stacking:

Multiple wafers of high-bandwidth memory are vertically stacked above the compute wafer. Advanced through-silicon via (TSV) technologies and ultra-dense microbumps ensure low-resistance, high-speed data transfer between layers.

3D Heterogeneous Integration:

By integrating compute, memory, and specialized accelerators in a 3D stack, WISE enables domain-specific optimizations, tailored for workloads like LLMs (Large Language Models), VLMs (Vision-Language Models), robotics, and complex scientific simulations.

Intelligent Interconnects:

A high-speed interconnect fabric is woven across the wafer, ensuring ultra-low-latency communication not just within a layer but across stacked layers, maximizing parallelism and efficiency.

Key Advantages

1. Massive Memory Bandwidth

With memory integrated at the wafer scale and stacked in multiple layers, WISE offers memory bandwidth orders of magnitude greater than current HBM3E solutions. This enables real-time processing of trillion-parameter models that would otherwise be infeasible due to memory bottlenecks.

2. Ultra-Low Latency

By eliminating long interconnects and discrete memory modules, WISE achieves latency reductions that traditional architectures cannot match. Data moving between stacked layers travels micrometers through the stack instead of millimeters across a package or board, cutting round-trip times dramatically.

3. Scalability

WISE’s architecture inherently scales. Need more memory? Stack more memory wafers. Need more compute? Integrate additional AI cores across the wafer. This modularity allows WISE to meet the demands of future workloads without the architectural limitations of today’s multi-chip systems.

4. Energy Efficiency

Shorter data pathways and reduced signaling overhead lead to substantial energy savings. For AI workloads, where memory access dominates energy consumption, WISE offers a breakthrough in performance-per-watt.

5. Simplified Software Stack

With WISE, models that fit within a single system’s stacked memory no longer need complex model parallelism, pipeline parallelism, or tensor slicing. Developers can train and run inference on giant models without dealing with the intricacies of sharding a model across devices, reducing software complexity and accelerating time to deployment.
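The bandwidth and energy advantages listed above lend themselves to back-of-envelope estimates. The sketch below assumes a dense model whose weights are each read once per generated token at batch size 1 (ignoring caches and activations), and it takes energy per bit as an input. All numeric values are hypothetical placeholders rather than measured WISE, HBM, or GPU figures, and the function names are chosen for this example.

```python
def max_decode_tokens_per_s(num_params: int, bytes_per_param: int,
                            bandwidth_bytes_per_s: float) -> float:
    """Upper bound on tokens/s when every weight is streamed once per token."""
    bytes_per_token = num_params * bytes_per_param
    return bandwidth_bytes_per_s / bytes_per_token


def transfer_energy_joules(num_bytes: float, picojoules_per_bit: float) -> float:
    """Energy needed to move num_bytes at a given cost per bit."""
    return num_bytes * 8 * picojoules_per_bit * 1e-12


if __name__ == "__main__":
    params = 10**12       # one trillion parameters
    bytes_per_param = 2   # 16-bit weights (assumption)

    # Hypothetical memory bandwidths in bytes/s.
    for bandwidth in (1e12, 1e13, 1e14):
        rate = max_decode_tokens_per_s(params, bytes_per_param, bandwidth)
        print(f"{bandwidth:.0e} B/s -> at most {rate:.1f} tokens/s")

    # Hypothetical data-movement costs in pJ/bit.
    for pj_per_bit in (10.0, 1.0, 0.1):
        energy = transfer_energy_joules(params * bytes_per_param, pj_per_bit)
        print(f"{pj_per_bit:>4} pJ/bit -> {energy:.1f} J per full pass over the weights")
```

Both quantities scale linearly: under these assumptions, ten times the bandwidth raises the token-rate ceiling tenfold, and a tenfold lower cost per bit cuts data-movement energy tenfold.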

Transforming AI Workloads

Large Language Models (LLMs)

Training and inference of LLMs like GPT-4 and beyond require memory footprints in the range of terabytes. Current systems split these models across hundreds or thousands of GPUs, introducing communication overhead and complexity. With WISE, a trillion-parameter model can be hosted entirely on a single wafer-scale system, eliminating these bottlenecks and reducing training times from months to weeks.
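The terabyte-scale footprint can be checked with simple arithmetic. The sketch below assumes 16-bit weights for inference and, for training, the commonly cited mixed-precision Adam accounting of roughly 16 bytes per parameter (2 for weights, 2 for gradients, 12 for 32-bit master weights and two optimizer moments). Activations and caches are ignored, and the byte counts are assumptions rather than WISE specifications.

```python
TB = 10**12  # decimal terabyte, in bytes


def inference_weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Bytes needed to hold the weights alone (16-bit by default)."""
    return num_params * bytes_per_param


def training_state_bytes(num_params: int, weight_bytes: int = 2,
                         grad_bytes: int = 2, optimizer_bytes: int = 12) -> int:
    """Bytes for weights, gradients, and optimizer state; activations excluded."""
    return num_params * (weight_bytes + grad_bytes + optimizer_bytes)


if __name__ == "__main__":
    params = 10**12  # one trillion parameters
    print(f"weights only:   {inference_weight_bytes(params) / TB:.0f} TB")
    print(f"training state: {training_state_bytes(params) / TB:.0f} TB")
```

For one trillion parameters this gives 2 TB for the weights alone and 16 TB of training state under these assumptions, which is why such models are split across many devices today.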

Vision-Language Models (VLMs)

VLMs demand simultaneous processing of large-scale vision and language data, often necessitating high memory bandwidth and low latency. WISE’s architecture is ideally suited to these workloads, enabling faster convergence and higher model fidelity.

Robotics and Real-Time AI

For real-world AI applications like autonomous driving, robotics, and edge AI, latency is critical. The ultra-low-latency design of WISE opens the door to real-time inference and decision-making, pushing the boundaries of what’s possible in autonomous systems.

Scientific Simulations and AGI Research

Complex simulations in fields like genomics, climatology, and physics require massive computational resources and memory bandwidth. WISE enables simulations at an unprecedented scale, bringing us closer to AGI by allowing models to reason over vastly larger datasets and more complex domains.

Setting a New Benchmark for AGI

AGI requires the ability to learn, adapt, and reason across domains—capabilities that demand unprecedented compute and memory performance. WISE, by eliminating traditional memory bottlenecks and maximizing parallelism, sets a new standard for what’s achievable in AI computing.

Imagine AGI systems capable of handling full-length movies as context, reasoning over entire medical datasets in real-time, or instantly simulating the implications of global policy changes. With WISE’s massive memory bandwidth and low-latency compute, these scenarios move from theoretical to practical.

Future Outlook

As models grow larger and datasets more complex, the need for architectures like WISE will only intensify. EinsNeXT is already looking beyond today’s wafer stacks to 3D wafer pyramids, further increasing density and bandwidth. Innovations in TSV density, thermal management, and interconnect fabrics will continue to enhance WISE’s capabilities.

In parallel, software ecosystems will evolve to exploit WISE’s hardware advantages, simplifying the development of ultra-large models and democratizing access to world-class AI capabilities.

Conclusion

WISE (Wafer-Intelligent Stacking Engine) represents a paradigm shift in AI and high-performance computing. By seamlessly integrating compute and memory at the wafer scale, WISE shatters the memory wall, delivering unmatched efficiency, scalability, and acceleration. In doing so, it lays the foundation for the next generation of AI—from LLMs and VLMs to robotics and AGI—propelling humanity into a future where intelligence is truly unbounded.

With WISE, the future isn’t just faster. It’s fundamentally smarter.