In the evolving landscape of AI and high-performance computing (HPC), the strategies of scale-up and scale-out are fundamental to meeting the escalating demands of data-intensive workloads. Traditionally, scale-up involves enhancing the capabilities of a single system by adding more memory, compute power, or storage, while scale-out focuses on expanding system capacity by connecting multiple systems in a distributed architecture. However, both approaches face limitations due to the bottleneck created by conventional memory architectures. Enter WISE (Wafer Intelligent Stacking Engine), a transformative technology that redefines the paradigms of scaling by offering 2TB of integrated wafer-scale memory.

Scaling Up with WISE

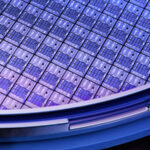

WISE revolutionizes the concept of scale-up by dramatically enhancing the memory capacity and bandwidth within a single system. With 2TB of wafer-scale, 3D-stacked high-bandwidth memory directly integrated onto the silicon wafer, systems can now handle datasets and model parameters that were previously impossible to accommodate within a single node.

This massive on-silicon memory eliminates the traditional memory wall, providing ultra-low latency and ultra-high bandwidth access to data. It enables real-time processing of trillion-parameter models and massive datasets without the overhead of data sharding or multi-node synchronization. For AI training, scientific simulations, and large-scale analytics, WISE allows unprecedented scale-up capabilities, simplifying system architecture and drastically reducing complexity.
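To make the single-node claim concrete, here is a rough back-of-the-envelope sketch of how a model's weight footprint compares with the 2TB capacity quoted above. The function names, the use of decimal terabytes, and the bytes-per-parameter values are illustrative assumptions, not WISE specifications, and the estimate covers weights only (no activations, gradients, or optimizer state).

```python
# Weights-only memory estimate. All names and figures below are illustrative
# assumptions for this sketch, not published WISE specifications.

WISE_CAPACITY_BYTES = 2 * 10**12  # 2 TB, assuming decimal terabytes


def weights_footprint_bytes(num_params: int, bytes_per_param: int) -> int:
    """Return the memory needed to hold the model weights alone."""
    return num_params * bytes_per_param


def fits_in_single_node(num_params: int, bytes_per_param: int,
                        capacity_bytes: int = WISE_CAPACITY_BYTES) -> bool:
    """Check whether the weights fit within one node's memory capacity."""
    return weights_footprint_bytes(num_params, bytes_per_param) <= capacity_bytes


if __name__ == "__main__":
    trillion = 10**12
    # 2 bytes per parameter corresponds to a 16-bit format such as FP16 or BF16;
    # 4 bytes corresponds to FP32.
    for bytes_per_param in (2, 4):
        footprint_tb = weights_footprint_bytes(trillion, bytes_per_param) / 10**12
        fits = fits_in_single_node(trillion, bytes_per_param)
        print(f"{bytes_per_param} B/param: {footprint_tb:.1f} TB, fits in one node: {fits}")
```

Under these assumptions, a trillion-parameter model stored in a 16-bit format just fits in 2TB, while the same model in 32-bit precision does not, so the numeric format matters when sizing a single-node deployment.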

Scaling Out with WISE

While WISE provides remarkable scale-up capabilities, it equally empowers scale-out strategies. By deploying multiple WISE-enabled nodes, each equipped with 2TB of integrated memory, organizations can build supercomputing clusters with a drastically reduced number of nodes compared to traditional architectures.

This not only reduces the physical footprint and power consumption of data centers but also minimizes the communication overhead that typically hampers distributed systems. High-bandwidth, low-latency interconnects between WISE nodes facilitate efficient parallel processing across the cluster, enabling workloads like distributed training of massive language models and real-time analytics on petabyte-scale datasets.

Furthermore, WISE simplifies the software stack required for scale-out deployments. With more memory available per node, fewer nodes are needed, reducing the complexity of distributed computing frameworks and accelerating development and deployment cycles.
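The node-count reduction described in this section comes down to simple division: the number of nodes needed is the total memory requirement divided by per-node capacity, rounded up. The sketch below illustrates that arithmetic. The 16 TB working set and the 640 GB conventional node (for example, eight 80 GB accelerators) are hypothetical figures chosen for illustration, and the function name is not part of any WISE tooling.

```python
# Minimum node count for a given memory requirement. The workload size and the
# conventional-node capacity are hypothetical values chosen for illustration.

WISE_NODE_BYTES = 2 * 10**12            # 2 TB per WISE node (decimal TB assumed)
CONVENTIONAL_NODE_BYTES = 640 * 10**9   # hypothetical: 8 accelerators x 80 GB


def nodes_needed(total_bytes: int, per_node_bytes: int) -> int:
    """Return the smallest node count whose combined memory covers total_bytes."""
    if total_bytes <= 0 or per_node_bytes <= 0:
        raise ValueError("sizes must be positive")
    # Integer ceiling division avoids floating-point rounding on large values.
    return -(-total_bytes // per_node_bytes)


if __name__ == "__main__":
    working_set = 16 * 10**12  # hypothetical 16 TB working set
    print("WISE nodes:", nodes_needed(working_set, WISE_NODE_BYTES))
    print("Conventional nodes:", nodes_needed(working_set, CONVENTIONAL_NODE_BYTES))
```

This counts memory capacity only. A real deployment would also have to account for compute throughput, interconnect bandwidth, and redundancy, any of which could raise the node count.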

The New Standard for Scalability

With WISE’s wafer-scale memory, the traditional trade-offs between scale-up and scale-out are fundamentally altered. Systems can scale vertically to handle massive workloads within a single node, while also scaling horizontally with fewer, more powerful nodes. This hybrid approach unlocks new efficiencies in performance, cost, and energy consumption.

For enterprises pushing the boundaries of AI, HPC, and big data, WISE sets a new benchmark. It transforms data center architecture, enabling faster insights, more accurate models, and the ability to tackle problems once considered beyond reach.

As data and model sizes continue to expand, WISE ensures that organizations are no longer constrained by memory limitations. It heralds a new era in which scaling is not just about adding more resources but about intelligent, efficient, and powerful system design, ushering in the future of computing.